TL;DR: The market is overly focused on improving LLMs: how many parameters they have, how large their context window is, or even whether they will achieve AGI. However, none of these advancements alone will define our future. The real transformation will come from the software surrounding LLMs, software that controls and orchestrates them and applies the right business rules. Even if we achieve true AGI, its impact will likely be far less disruptive than the market anticipates.

Join me in exploring the future and discovering how software can leverage these powerful new tools we call LLMs.

LLMs Under the Hood

The major players in the AI field have begun discussing AGI and even predicting when it might arrive. However, we don’t even have a clear definition of AGI. How can we aim for a goal that isn’t properly defined?

Whatever AGI may be, I’m convinced that LLMs built on the current transformer architecture will never create the way humans do. AI doesn’t actually think; it mimics writing, drawing, and even music. It’s mimicry, but at a masterful level.

These systems possess a mathematical superpower, something humanity never thought possible. It’s fascinating to see creativity and personal style translated into complex mathematical formulas. These formulas have become so intricate that we struggle to trace backward and understand why a specific output occurs. However, difficulty doesn’t equal wizardry: the process remains deterministic and precise, and it is limited to the data the model was trained on and the context it is given.
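To make the "no wizardry" point concrete, here is a toy sketch of the last step of text generation. It assumes made-up scores (logits) for a three-word vocabulary; real models score tens of thousands of tokens, and real inference stacks add details (batching, floating-point ordering on GPUs) that this toy ignores. Turning scores into probabilities is plain arithmetic, greedy decoding always returns the same token, and even sampling becomes reproducible once the random seed is fixed.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(vocab: list[str], logits: list[float]) -> str:
    """Always choose the most probable token: same input, same output."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return vocab[best]


def sampled_pick(vocab: list[str], logits: list[float], seed: int) -> str:
    """Sample a token; with a fixed seed the 'randomness' is reproducible too."""
    rng = random.Random(seed)
    return rng.choices(vocab, weights=softmax(logits), k=1)[0]


# Hypothetical next-token scores for the prompt "The cat sat on the"
vocab = ["mat", "sofa", "moon"]
logits = [2.5, 1.0, -1.0]

print(greedy_pick(vocab, logits))  # mat
print(sampled_pick(vocab, logits, seed=7) == sampled_pick(vocab, logits, seed=7))  # True
```

The apparent creativity lives in the learned scores, not in any step of this procedure.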

Generative AI Is a Fancy Mimic, and That’s OK

It started, of course, with imitating a writer’s style, musical compositions, and jokes. All of these seemed harmless, but they were only the first use cases. People quickly began using AI to mimic training routines, nutrition plans, and travel itineraries, which directly affect real lives. The mimicry hasn’t stopped there, and it shows no sign of slowing down.

For me, the significant milestone will come when LLMs start mimicking procedures and protocols: onboarding documents, sales pitches, help desk tickets, and incident response protocols.

Many companies have countless documents, both internal and external, for onboarding, client communication, task execution, and complaint handling. This is exactly where AI excels: mimicking processes at scale.

Don’t get me wrong; I still believe AI will replace many jobs. We tend to overestimate the complexity of our work, but the reality is that many people simply execute processes and follow protocols. We need to learn how to create, measure, adapt, and migrate between protocols; these are the skills that allow us to outperform AI.

Our role will be to build protocols and set the direction while AI executes, observes, and reports. We will connect knowledge, create businesses, and experiment with new ideas. We will achieve greater results, our work will have a larger impact, and we will need fewer people to accomplish the same outcomes. This process will keep building on itself, cutting down dependencies, clearing obstacles, and boosting productivity even more.

All that productivity won’t come from AGI; there’s no wizardry in an LLM’s mimicry. It’s simply another tool, much like the printing press, which drastically scaled the reach of writing. Since LLMs are digital tools, the best way to use and scale them is through software.

Why AGI Is Not the Future We Think

There are limits to what an LLM can do, even one that reaches AGI. To have real-world impact, an LLM needs to operate within a business model. On its own, an LLM is a predictor: it outputs numbers that are then decoded into text, images, or audio. It can’t connect to banks, calendars, or other services by itself.

The model isn’t just the .bin or .h5 weights file downloaded from Hugging Face. It’s part of a larger platform that includes features like moderation, guardrails, function calls, structured outputs, and reasoning. These capabilities depend on the supporting software built around the model.
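As a minimal sketch of a guardrail that lives outside the model, assume the model has been asked to answer with a JSON object describing a refund. The field names (`order_id`, `amount`), the `MAX_AUTO_REFUND` threshold, and the routing labels are all hypothetical; the point is that parsing, validation, and the business rule are ordinary deterministic code.

```python
import json
from dataclasses import dataclass

# Hypothetical business rule: refunds above this amount need a human.
MAX_AUTO_REFUND = 100.0


@dataclass
class RefundRequest:
    order_id: str
    amount: float


def parse_refund(raw_model_output: str) -> RefundRequest:
    """Validate the model's JSON text before any business logic touches it."""
    try:
        data = json.loads(raw_model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model did not return valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    order_id = data.get("order_id")
    amount = data.get("amount")
    if not isinstance(order_id, str) or not order_id:
        raise ValueError("order_id must be a non-empty string")
    # bool is a subclass of int in Python, so exclude it explicitly
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount <= 0:
        raise ValueError("amount must be a positive number")
    return RefundRequest(order_id=order_id, amount=float(amount))


def route_refund(raw_model_output: str) -> str:
    """Deterministic guardrail: the model proposes, the software decides."""
    try:
        req = parse_refund(raw_model_output)
    except ValueError:
        return "rejected"
    return "auto_approved" if req.amount <= MAX_AUTO_REFUND else "needs_human_review"


print(route_refund('{"order_id": "A-17", "amount": 40}'))    # auto_approved
print(route_refund('{"order_id": "A-17", "amount": 4000}'))  # needs_human_review
print(route_refund("Sure! I refunded the order."))           # rejected
```

Even when a provider offers a "structured output" mode, re-validating on your side keeps the business rule in code you control.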

Even though LLMs can trigger some external requests through function calls, we can’t rely on them alone. A function call may suggest the right action, but even when it does so flawlessly, the model can’t handle every edge case, failure, queue, rate limit, differing protocol, or unusual standard. These are just a few of the challenges tied to external requests.
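Here is one small example of the work that falls to software once a function call has been suggested: retrying transient failures with exponential backoff. This is a sketch under stated assumptions. `TransientError` and `book_meeting` are hypothetical stand-ins for a real service client and its rate-limit or timeout errors, and the `sleep` parameter is injectable only so the delays can be observed.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, HTTP 429/503)."""


def call_with_retries(
    action: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run an external action, retrying transient failures with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return action()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up; the caller can queue, alert, or escalate
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
    raise RuntimeError("unreachable")


# The model suggested calling "book_meeting"; software actually executes it.
attempts = {"count": 0}


def book_meeting() -> str:
    """Hypothetical calendar call that is rate-limited twice before succeeding."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("429 Too Many Requests")
    return "meeting booked"


delays: list[float] = []
print(call_with_retries(book_meeting, sleep=delays.append))  # meeting booked
print(delays)  # [0.5, 1.0]
```

Retries cover only one item on the list above; queues, rate limiting, and protocol translation would sit alongside this in a real integration.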

Architecturally, LLMs can’t directly interact with databases, manage event queues, manipulate files, or open network connections. They are unaware of anything beyond the context they are given. Overcoming these limitations and building real solutions requires robust infrastructure and software that provide true integration.
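A minimal sketch of that division of labor, using Python’s built-in `sqlite3` module: the software owns the database connection, and the model only produces an intent (for example, parsed from a function call). The `orders` table, the `order_status` tool name, and the whitelist are hypothetical; the idea is that only pre-approved, parameterized queries ever reach the database.

```python
import sqlite3

# The software, not the model, owns the database connection.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("A-17", "shipped"), ("B-42", "pending")],
)

# Hypothetical whitelist: the only queries the model is allowed to trigger.
ALLOWED_QUERIES = {
    "order_status": "SELECT status FROM orders WHERE id = ?",
}


def run_tool(intent: dict) -> str:
    """Translate a model-proposed intent into a safe, parameterized query."""
    tool = intent.get("tool")
    sql = ALLOWED_QUERIES.get(tool) if isinstance(tool, str) else None
    if sql is None:
        return "error: unknown tool"
    # Parameters are bound by sqlite3, never pasted into the SQL string.
    row = conn.execute(sql, (str(intent.get("order_id", "")),)).fetchone()
    return row[0] if row else "error: order not found"


print(run_tool({"tool": "order_status", "order_id": "A-17"}))  # shipped
print(run_tool({"tool": "drop_table"}))                        # error: unknown tool
```

The model never sees a connection string or writes SQL; it can only pick from actions the software already knows how to execute safely.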

I could go on, but you get the idea. An LLM by itself is limited to its training data and prompts. With engineering, however, it can achieve what was previously impossible or prohibitively expensive.

Image 1: The Meeseeks. Blue humanoid creatures that exist solely to fulfill one task and then cease to exist. They are incredibly effective but often hilariously overwhelmed when given overly complex or existentially difficult tasks.

The best use of AI is to mimic processes and human behavior, while the best use of software is to structure and create deterministic use cases. Neither replaces the other; they are complementary, and when combined they become powerful allies in solving complex problems.

The Role of Software in Scaling AI

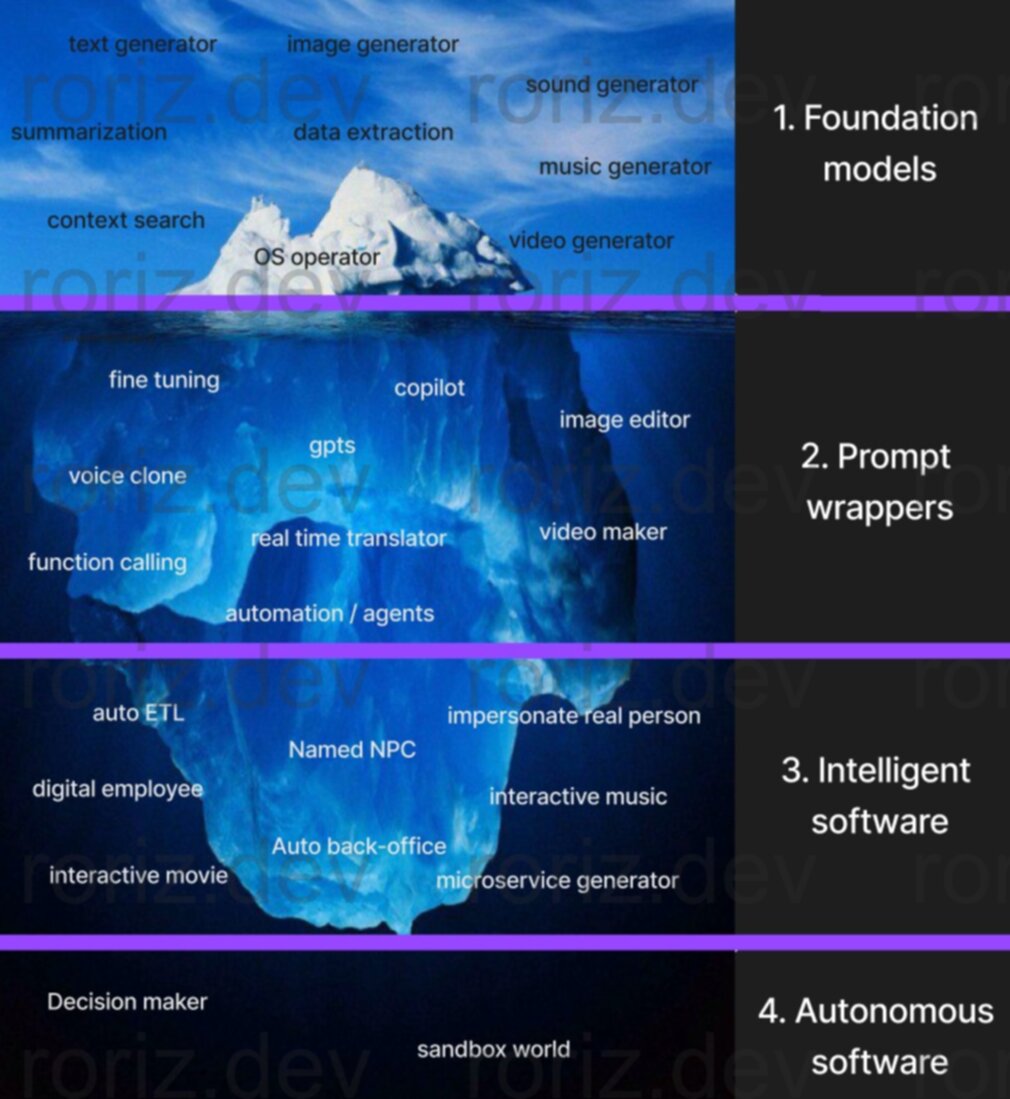

We are just beginning to explore the potential of combining AI with engineering. Right now, almost all solutions are closely tied to foundation models. While many companies, including those that own the foundation models, are working on second-level solutions, no clear dominant players have emerged in this space yet.

As we go deeper into AI applications, the need for functionality, consistency, integrations, and business rules becomes more evident. These are challenges that only software can fully address today.

Image 2: Iceberg of potential AI fields, starting with 1. foundation models, 2. prompt wrappers, 3. intelligent software, and 4. autonomous software.