TL;DR Software has traditionally been overly deterministic, even in cases where businesses don’t require it. AI has transformed software development by making software more flexible and opening up new ways to accept input. I demonstrate this through a series of articles discussing the possibilities offered by LLMs.

I’ll explore how the data extraction capabilities of LLMs enable categorization, moderation, and easy data transformation, among other tasks. Combining AI+Engineer can significantly impact industries like social media and marketplaces. Every technique has its ideal time and place, and I’ll provide examples and discuss trade-offs to highlight effective applications.

The Old Way vs. The New

Before LLMs, data extraction relied heavily on tools like regex, specialized machine learning models, and keyword searches. Businesses often treated free-text fields as unreliable and difficult to process, dismissing them as "garbage in, garbage out." Businesses that rely heavily on user content often establish human moderation teams, like those at Glassdoor and Facebook.

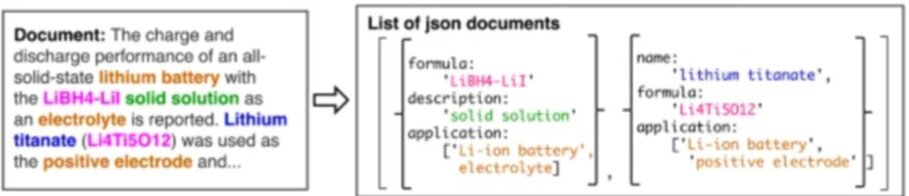

Image 1: Diagram showing text with some highlighted words, being converted into structured data.

Another common strategy involved leveraging strong community involvement for content moderation. Platforms like Reddit use dedicated moderators, while League of Legends fosters an honor system. Despite their effectiveness, these methods have been expensive, challenging, and far from perfect.

With LLMs, achieving results comparable to those of moderation teams is now within reach for almost anyone. Moderation has become much more accessible with simple prompting, opening doors to new business opportunities. By integrating structured workflows and robust ETL processes, the quality and scalability of moderation can greatly improve.

However, there’s still much to learn about optimizing prompts and workflows for effective data extraction, as this isn’t a native behavior of LLMs. Still, today’s results are remarkable, and specialized LLMs for data extraction will simplify these tasks even further in the future.

Cheaper Content Moderation - Social Media Industry

Social media companies depend on user-generated content, including text, images, and audio. They face challenges in filtering harmful material like violence, sexual content, threats, illicit activities, and spam, while simultaneously encouraging user engagement.

Historically, keyword searches have been a simple, cost-effective moderation tool. However, users often find creative ways to bypass these filters. For instance, mental health discussions rely on context-sensitive language, making detection by keyword systems ineffective.
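To make the weakness concrete, here is a minimal sketch of a keyword filter. The blocklist and the method name `flagged_by_keywords?` are hypothetical and exist only for illustration; a production list would be much longer.

```ruby
# Hypothetical blocklist, for illustration only.
BLOCKED_KEYWORDS = %w[depressed depression suicidal self-harm].freeze

# Returns true when the text contains any blocked keyword (case-insensitive).
def flagged_by_keywords?(text, keywords = BLOCKED_KEYWORDS)
  normalized = text.downcase
  keywords.any? { |keyword| normalized.include?(keyword) }
end

puts flagged_by_keywords?('I have been so depressed lately') # true
puts flagged_by_keywords?('Feeling stuck in a dark place')   # false: no keyword, although the context matters
puts flagged_by_keywords?('d3pressed again')                 # false: trivial obfuscation slips through
```

The second and third posts pass the filter even though a human moderator would likely want to see them.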

```ruby
require 'net/http'
require 'json'
require 'uri'

# Assumes the API key is stored in the OPENAI_API_KEY environment variable.
api_key = ENV.fetch('OPENAI_API_KEY')

uri = URI('https://api.openai.com/v1/chat/completions')
headers = {
  'Content-Type' => 'application/json',
  'Authorization' => "Bearer #{api_key}"
}

# Ask for the reasoning together with the score so the answer can be audited.
prompt = <<~PROMPT
  You are a content moderator reviewing a post to determine whether it discusses mental health struggles.
  Provide the reasoning behind your score and rate it on a scale from 1 to 10,
  where 1 indicates it is probably not discussing them, and 10 indicates it is likely discussing them.
  Return the JSON object: {score: Number, reasoning: String}
PROMPT

user_message = 'Feeling stuck in a dark place'

data = {
  model: 'gpt-4o-mini',
  messages: [
    { role: 'system', content: prompt },
    { role: 'user', content: user_message }
  ],
  # Forces the model to answer with a JSON object.
  response_format: { type: 'json_object' }
}

response = Net::HTTP.post(uri, data.to_json, headers)
puts JSON.parse(response.body)['choices'][0]['message']['content']
# {
#   "score": 8,
#   "reasoning": "The phrase 'stuck in a dark place' typically indicates a feeling of being trapped..."
# }
```
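A score only becomes useful once it drives a decision. The sketch below shows one way to route a post based on the returned JSON. The thresholds and the method name `route_moderation` are assumptions, not part of the original example, and should be tuned against your own data.

```ruby
require 'json'

# Hypothetical thresholds; tune them against your own labeled data.
REVIEW_THRESHOLD = 5
ESCALATE_THRESHOLD = 8

# Turns the model's JSON string into a routing decision.
# Anything malformed or out of range goes to a human instead of being trusted.
def route_moderation(raw_content)
  result = JSON.parse(raw_content)
  return :human_review unless result.is_a?(Hash)

  score = result['score']
  return :human_review unless score.is_a?(Numeric) && score.between?(1, 10)

  if score >= ESCALATE_THRESHOLD
    :escalate
  elsif score >= REVIEW_THRESHOLD
    :human_review
  else
    :allow
  end
rescue JSON::ParserError
  :human_review
end

puts route_moderation('{"score": 8, "reasoning": "..."}') # escalate
puts route_moderation('{"score": 2, "reasoning": "..."}') # allow
puts route_moderation('not json')                         # human_review
```

Sending every unexpected response to human review is a deliberately conservative choice: the model's output is treated as untrusted input.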

Categorization by Product Description - Marketplace Industry

In any marketplace, the products displayed on the platform serve as its showcase. Poorly written, miscategorized, or inadequately presented products can negatively impact conversion rates or, even worse, damage the marketplace’s overall reputation. To address this issue, many platforms use an approval process or reputation system to reduce low-quality content.

For generic marketplaces like Amazon or Facebook, product listings cover a vast range. This variety often includes low-quality postings with generic titles, inaccurate descriptions, all-uppercase text, or worse. Reviewing every listing manually would require significant resources, driving up costs and reducing the marketplace’s competitiveness.

One alternative is to use existing data to cross-verify listings and generate consistent information. For example:

```ruby
require 'net/http'
require 'json'
require 'uri'

# Assumes the API key is stored in the OPENAI_API_KEY environment variable.
api_key = ENV.fetch('OPENAI_API_KEY')

uri = URI('https://api.openai.com/v1/chat/completions')
headers = {
  'Content-Type' => 'application/json',
  'Authorization' => "Bearer #{api_key}"
}

prompt = <<~PROMPT
  You are a product classifier. Based on the product description, classify the product into any applicable categories.
PROMPT

user_message = 'product description: Elevate your culinary experience with our Handcrafted Bamboo Cutting Board! Perfect for both novice cooks and seasoned chefs, this beautiful cutting board combines functionality with an eco-friendly design. Made from 100% natural bamboo, it’s not only sustainable but also adds a touch of elegance to your kitchen.'

data = {
  model: 'gpt-4o-mini',
  messages: [
    { role: 'system', content: prompt },
    { role: 'user', content: user_message }
  ],
  # The JSON schema restricts the answer to the listed enum values.
  response_format: {
    type: 'json_schema',
    json_schema: {
      name: 'category_matcher',
      schema: {
        type: 'object',
        properties: {
          category: {
            type: 'string',
            enum: ['kitchen', 'electronic', 'house']
          },
          subCategory: {
            type: 'string',
            enum: ['Coffee Makers and Kettles', 'Pans', 'Household Utensils']
          }
        },
        required: ['category', 'subCategory'],
        additionalProperties: false
      },
      strict: true
    }
  }
}

response = Net::HTTP.post(uri, data.to_json, headers)
puts JSON.parse(response.body)['choices'][0]['message']['content']
# {"category":"kitchen","subCategory":"Household Utensils"}
```
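Because the schema validates `category` and `subCategory` independently, a pairing such as `house` with `Pans` would still pass. A minimal sketch of a cross-check done in plain Ruby follows. The `TAXONOMY` mapping and the method name are hypothetical and would normally come from the marketplace's own catalog.

```ruby
require 'json'

# Hypothetical taxonomy: which subcategories are allowed under each category.
TAXONOMY = {
  'kitchen' => ['Coffee Makers and Kettles', 'Pans', 'Household Utensils'],
  'house' => ['Household Utensils']
}.freeze

# Returns true only when the subcategory belongs to the returned category.
# Unknown categories map to an empty list, so they are rejected.
def consistent_classification?(raw_content, taxonomy = TAXONOMY)
  result = JSON.parse(raw_content)
  taxonomy.fetch(result['category'], []).include?(result['subCategory'])
end

puts consistent_classification?('{"category":"kitchen","subCategory":"Household Utensils"}') # true
puts consistent_classification?('{"category":"house","subCategory":"Pans"}')                 # false
```

Listings that fail the check can be retried with a narrower prompt or sent to manual review.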

Data Extraction as a New Tool

This technique is not perfectly accurate and will require continuous reevaluation, since its effectiveness can vary significantly depending on the model version, the prompt, and the quality of user inputs. Despite these inconsistencies, it already delivers real value to businesses.
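One lightweight way to reevaluate is to keep a small labeled set and measure accuracy again whenever the prompt or model changes. The sketch below assumes a hypothetical `LABELED_EXAMPLES` list and uses a stand-in classifier so it runs offline; in practice the lambda would call the LLM.

```ruby
# Hypothetical labeled examples; in practice, sample them from real, reviewed content.
LABELED_EXAMPLES = [
  { text: 'Feeling stuck in a dark place', expected: true },
  { text: 'Selling a dark wooden table', expected: false },
  { text: 'I cannot see a way out anymore', expected: true }
].freeze

# Runs a classifier (any callable that takes text and returns true or false)
# over the labeled examples and returns the share it got right.
def accuracy(classifier, examples = LABELED_EXAMPLES)
  correct = examples.count { |example| classifier.call(example[:text]) == example[:expected] }
  correct.to_f / examples.size
end

# Stand-in classifier so the sketch runs without network access.
keyword_stub = ->(text) { text.downcase.include?('dark') }

puts accuracy(keyword_stub).round(2) # 0.33
```

Tracking this number per prompt and per model version makes regressions visible before they reach users.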

Data extraction often involves adjusting prompts, transforming user content, or, as a last resort, creating a fine-tuned version. These adjustments are simple to learn but hard to master. However, recent years have seen a significant drop in costs and a dramatic rise in accuracy, making the process far easier.

These examples barely scratch the surface. With substantial budgets, rich categorization, workflows, risk scoring, contextual analysis beyond user inputs, and fine-tuning, LLMs could potentially match or even surpass human-level accuracy. That is what I expect to see in the near future.

Data extraction is clearly here to stay, but like any tool, it must be used appropriately. Misuse can undermine its effectiveness, making the LLM’s output as unpredictable as a lottery. Success lies in understanding its limitations and leveraging it effectively.

Limitations

It’s well-known that LLMs can "hallucinate" and produce incorrect or inconsistent data. These issues are inherent to the technology; no LLM is immune, and it’s unlikely that one ever will be. Instead of overreacting or blaming the model, we can use specific techniques to achieve more consistent results:

- Ask for Clarification: Request reasoning behind extracted data before presenting the final answer. This approach encourages the model to align future tokens with the reasoning provided.

- Limit Context Overload: While LLMs now support larger context windows, providing too much context can create a "needle in a haystack" problem. Use minimal context whenever possible, and filter, summarize, or transform input text beforehand to improve results.

- Avoid Extracting Too Much Data at Once: Attempting to extract large amounts of data in a single query can cause inaccuracies or bias, as one piece may influence another. Focus on related data groups, such as first and last names or categories and subcategories. Make multiple requests if needed; applying the single-responsibility principle leads to better accuracy.

- Avoid Calculations: Do not rely on LLMs for calculations. Think of an LLM as similar to your phone’s keyboard; asking it to calculate is like typing "1+1" on your phone and accepting the next suggestion. Extract raw data and handle calculations externally. For example, if the text provides a price in another currency but you need it in dollars, extract the raw price and currency, then convert it outside the LLM. Avoid shortcuts, even if they seem to work at first.

- Handle Empty Responses Carefully: Only extract data when you are confident it exists in the text. For instance, if you ask for the color of a car but no car is mentioned, the LLM might return a random color or "none." Verify that the data is present before extracting it, if possible.

- Account for Values Outside Enum Lists: When working with enum lists, if the correct value is missing, the LLM might choose an incorrect option from the list. To prevent this, include alternatives like "other" or "none" to avoid overfitting and ensure more accurate responses.

- Be Creative: Use preliminary prompts to extract simpler data before attempting to gather complex information. Rephrase user input to remove unnecessary details, and ask for the same information in different ways. There is no universally perfect approach, so experiment to find what works best for your needs and share your findings!
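Several of these tips come down to post-processing the raw extraction in ordinary code. The sketch below illustrates three of them: converting currency outside the LLM, treating "none" as a missing value, and mapping unexpected enum values to "other". The exchange rates, color list, and method names are hypothetical and chosen only for illustration.

```ruby
require 'json'

# Hypothetical exchange rates to USD; a real system would look up current rates.
USD_RATES = {
  'USD' => Rational('1'),
  'EUR' => Rational('1.10'),
  'BRL' => Rational('0.20')
}.freeze

# Hypothetical enum that includes explicit escape hatches.
COLORS = %w[red blue black white other none].freeze

# Converts an extracted amount to dollars in plain Ruby, never inside the prompt.
def to_usd(amount, currency, rates = USD_RATES)
  rate = rates.fetch(currency) { raise ArgumentError, "unknown currency: #{currency}" }
  (Rational(amount.to_s) * rate).round(2).to_f
end

# Maps a missing or "none" color to nil and anything off-list to "other".
def normalize_color(value)
  return nil if value.nil? || value == 'none'

  COLORS.include?(value) ? value : 'other'
end

# Post-processes the raw price, currency, and color returned by the model.
def normalize_listing(raw_content)
  data = JSON.parse(raw_content)
  {
    color: normalize_color(data['color']),
    price_usd: to_usd(data['price'], data['currency'])
  }
end

listing = normalize_listing('{"color":"none","price":"50.00","currency":"EUR"}')
puts listing[:color].inspect # nil
puts listing[:price_usd]     # 55.0
```

The model only has to copy the price and currency from the text, which it does reliably, while the arithmetic stays deterministic.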

If your problem cannot be addressed by these tips, you might not be using the right tool. LLMs have a wide range of effectiveness; they can be a perfect fit or result in disaster. Proper implementation and problem selection are what position LLMs for success. Remember, there is no silver bullet in technology.

The Role of Software in Scaling AI

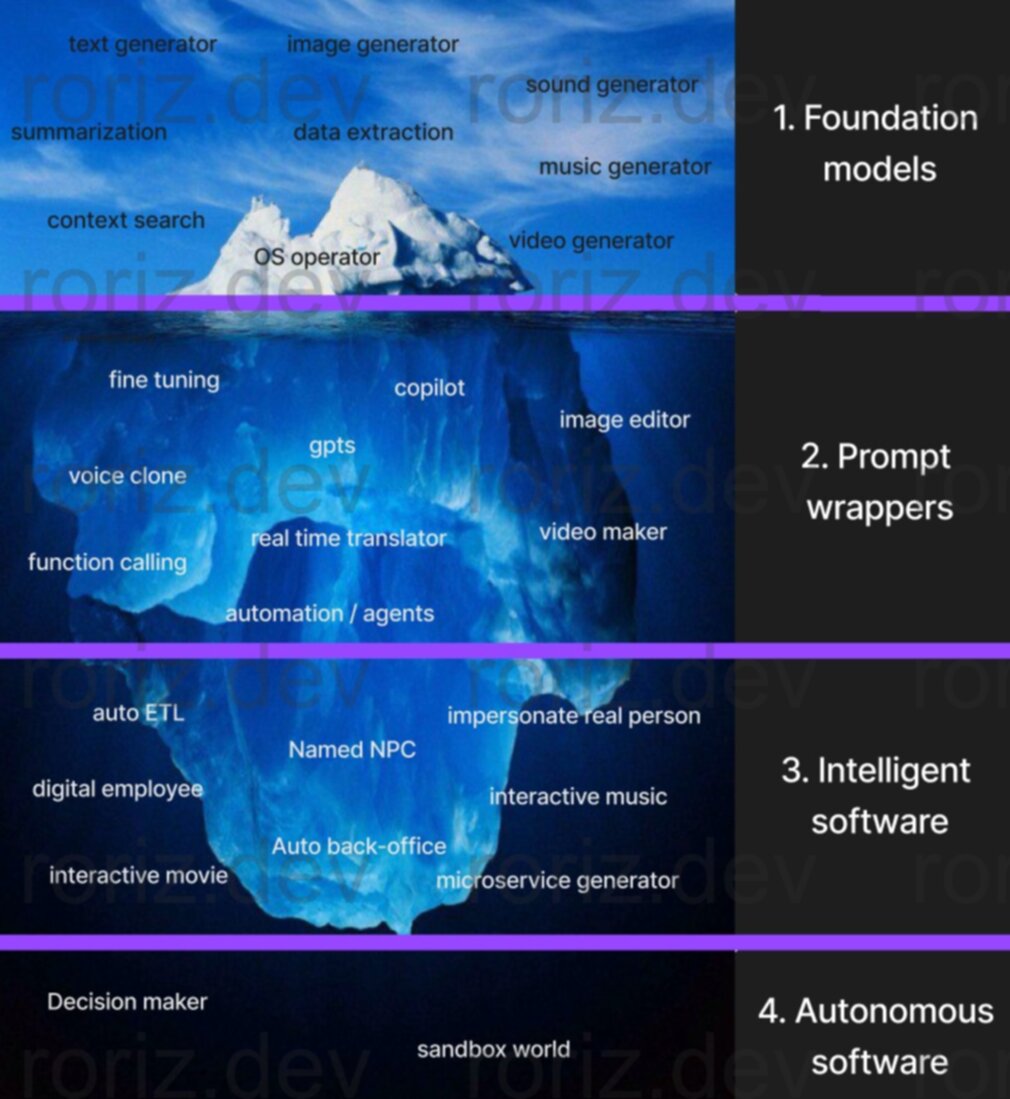

We are just beginning to explore the potential of combining AI+Engineer. Right now, almost all solutions are closely tied to foundation models. While many companies are working on second-level solutions, including those that own the foundation models, we have yet to see clear dominant players emerging in this space.

As we go deeper into AI applications, the need for functionalities, consistency, integrations, and business rules becomes more evident. These are challenges that only software can fully address today.

Image 2: Iceberg of potential AI fields, starting with 1. foundation models, 2. prompt wrappers, 3. intelligent software, and 4. autonomous software.